How to Use AI Agents: A Practical Guide for 2026

Learn how to use AI agents effectively with step-by-step guidance on design, governance, integration, and evaluation for teams and developers in 2026.

According to Ai Agent Ops, AI agents are autonomous software entities that use AI models and tools to perform tasks, reason, and act on your behalf. If you're wondering how to use AI agents, this guide helps you design, deploy, and govern agentic workflows so your team can automate complex processes. By the end, you'll know how to start with a pilot and scale responsibly.

Foundations: What is an AI agent and why it matters

AI agents are autonomous or semi-autonomous software systems that combine large language models, tools, and data sources to perform tasks, reason, and take actions with minimal human prompting. They can manage workflows, fetch information, synthesize insights, and trigger downstream processes across apps and databases. For developers and product teams, this capability matters because it enables a new class of automation: not just scripted steps, but adaptable agents that can plan, decide, and adjust as conditions change. If you're wondering how to use AI agents in your organization, you’ll want a clear plan that covers objectives, governance, and safety from day one. According to Ai Agent Ops, well-designed agent architectures reduce integration friction and accelerate time-to-value when paired with observable metrics and a lightweight governance layer. The goal is to obtain reliable results while preserving control and auditability, even as you scale. In practice, you start small with a single end-to-end use case and expand only after you prove value and safety.

Core capabilities and design patterns

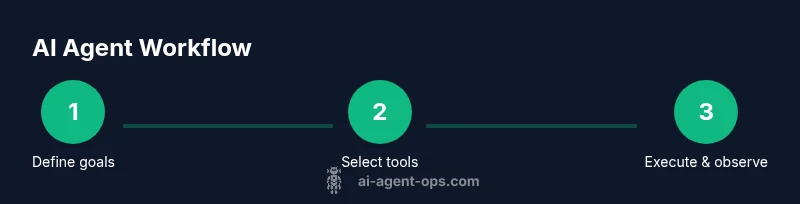

Modern AI agents excel when they combine three pillars: reasoning, action, and observation. They reason over goals and constraints, select tools (like web search, calendar, or data queries), execute tasks, and observe outcomes to adjust behavior. For teams building agentic workflows, common patterns include orchestrated agents that delegate subtasks to specialized agents, chained prompts for multi-step tasks, and stateful memory to maintain context across sessions. When deciding how to use AI agents, map business problems to agent roles: a data-collection agent, a decision-support agent, and an automation agent that triggers operational tasks. A practical tip: start with a pilot that addresses a single end-to-end process before expanding to parallel workflows, so you can measure speed and accuracy gains without overwhelming the system. Ground rules like prompt discipline, versioning, and fallback behavior help keep complexity manageable.
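The reason, act, observe cycle described above can be sketched in a few lines. This is a minimal illustration, not a production design: `choose_action`, `run_agent`, and the `lookup` tool are hypothetical names, and the reasoning step is a hard-coded stand-in for what would normally be an LLM call.

```python
from typing import Callable, Optional

# Hypothetical tool registry; real tools would call search, calendar, or data APIs.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup": lambda query: f"result for {query}",
}

def choose_action(goal: str, observations: list[str]) -> Optional[tuple[str, str]]:
    """Stand-in for the reasoning step; a real agent would ask an LLM here."""
    if not observations:
        return ("lookup", goal)  # nothing observed yet, so gather data first
    return None                  # treat the goal as satisfied

def run_agent(goal: str, max_steps: int = 5) -> list[str]:
    observations: list[str] = []
    for _ in range(max_steps):                       # step limit acts as a fallback
        action = choose_action(goal, observations)   # reason
        if action is None:
            break
        tool_name, tool_input = action
        result = TOOLS[tool_name](tool_input)        # act
        observations.append(result)                  # observe
    return observations
```

The `max_steps` cap is one simple form of the fallback behavior mentioned above: even if the reasoning step never decides it is done, the loop stops.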

Architecture and platform choices

Your agent solution should start with a lightweight architecture that can scale. The core components include an LLM driver, a tool registry, a context store, and a safe execution layer that enforces policies. Many teams prefer agent orchestration platforms that manage task flows, retries, and failure modes. When selecting an environment, consider the provider's compatibility with your data sources, authentication methods, and security requirements. For example, you may combine an open-source agent framework with a managed LLM service for cost efficiency and governance. In practice, a modular design makes it easier to swap tools or pipelines as needs evolve. When you take AI agents to production, this modularity lets teams pilot one capability and later layer on additional channels such as RPA, chat interfaces, or monitoring dashboards.
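One way to picture how these four components fit together is the sketch below. All class and field names (`LLMDriver`, `EchoDriver`, `Agent`, `allowed_tools`) are assumptions made for illustration; they do not come from any particular framework, and `EchoDriver` is a placeholder where a client for a managed LLM service would go.

```python
from dataclasses import dataclass, field
from typing import Callable, Protocol

class LLMDriver(Protocol):
    """Anything with a complete() method can serve as the LLM driver."""
    def complete(self, prompt: str) -> str: ...

@dataclass
class EchoDriver:
    """Placeholder driver; swap in a real LLM client without touching Agent."""
    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"

@dataclass
class Agent:
    driver: LLMDriver
    tools: dict[str, Callable[[str], str]] = field(default_factory=dict)  # tool registry
    context: list[str] = field(default_factory=list)                      # context store
    allowed_tools: set[str] = field(default_factory=set)                  # execution policy

    def call_tool(self, name: str, arg: str) -> str:
        # Safe execution layer: refuse tools the policy does not allow.
        if name not in self.allowed_tools:
            raise PermissionError(f"tool {name!r} is not allowed by policy")
        result = self.tools[name](arg)
        self.context.append(f"{name}({arg}) -> {result}")
        return result
```

Because `Agent` depends only on the `LLMDriver` protocol, the driver or any single tool can be replaced independently, which is the modularity the paragraph above argues for.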

Data, tools, and integration

AI agents rely on tools and data to operate. This means you’ll need connectors to your CRM, databases, messaging apps, file storage, and external APIs. Define data boundaries: what data can be accessed, what must be logged, and how to handle PII or sensitive information. Tools should be cataloged in a tool registry with clear input/output contracts. The integration layer should support observability so you can trace decisions and outcomes back to prompts and tool results. It's also critical to design idempotent actions where repeated executions don’t produce inconsistent results. If a task fails midway, the agent should gracefully retry with backoff and preserve any partial progress to avoid data duplication or inconsistent states. Ai Agent Ops analysis shows that robust data integration reduces downstream errors and speeds iteration.
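A minimal sketch of the retry and idempotency ideas above, under stated assumptions: `retry_with_backoff` and `IdempotentStore` are hypothetical helpers, the in-memory dictionary stands in for a real datastore, and the delays are illustrative defaults rather than recommended values.

```python
import time

def retry_with_backoff(action, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call action(); on failure wait base_delay * 2**attempt, then try again."""
    for attempt in range(attempts):
        try:
            return action()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of attempts, surface the last error
            sleep(base_delay * 2 ** attempt)

class IdempotentStore:
    """Applies each write at most once, keyed by an idempotency key."""
    def __init__(self):
        self.records = {}

    def write(self, key, value):
        # A repeated key returns the stored value instead of writing again.
        return self.records.setdefault(key, value)
```

Pairing the two is what keeps retries safe: if a write succeeded but its acknowledgement was lost, the retried call reuses the same key and does not create a duplicate record.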

Governance, safety, and risk management

Safety in agentic AI is not optional. Establish guardrails, access controls, and monitoring to prevent unintended actions. Create a policy that defines allowed tool types, rate limits, and failure-handling rules. Implement attribution and logging so you can audit decisions. Consider data privacy, compliance requirements, and vendor risk. Ai Agent Ops recommends embedding a lightweight governance model from day one to reduce surprises when the system scales. Build a playbook outlining escalation paths, rollback procedures, and roles so your team can respond quickly if something goes wrong. To keep the human in the loop when needed, set an explicit trigger for human review in high-stakes scenarios.
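To make the policy idea concrete, here is a small sketch of a guard that enforces an allow-list, a per-run call limit, and a human-review trigger, and logs every decision for audit. `Policy`, `Guard`, and the tool names in the usage note are hypothetical; a real deployment would likely persist the log and define limits per tool.

```python
from dataclasses import dataclass, field

@dataclass
class Policy:
    allowed_tools: set
    max_calls_per_run: int
    review_tools: set = field(default_factory=set)  # high-stakes tools

@dataclass
class Guard:
    policy: Policy
    calls: int = 0
    audit_log: list = field(default_factory=list)

    def check(self, tool: str) -> str:
        """Return 'allow', 'deny', or 'review', and log the decision."""
        if tool not in self.policy.allowed_tools:
            decision = "deny"
        elif self.calls >= self.policy.max_calls_per_run:
            decision = "deny"      # rate limit reached for this run
        elif tool in self.policy.review_tools:
            decision = "review"    # route to a human before executing
        else:
            decision = "allow"
        if decision != "deny":
            self.calls += 1
        self.audit_log.append((tool, decision))
        return decision
```

For example, with `Policy({"search", "refund"}, max_calls_per_run=2, review_tools={"refund"})`, a `refund` request comes back as `"review"` rather than executing directly, and any call past the second is denied.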

Prompt design and policy creation

Prompts are the primary interface to your AI agents. Start with a concise system prompt that defines the agent’s role, goals, and constraints, followed by task-specific prompts. Build a policy layer that governs tool usage, data access, and fallback behavior. Use few-shot examples to illustrate correct patterns and edge cases. Include explicit failure modes so the agent can handle exceptions gracefully. Keep prompts versioned and tested in a sandbox before production. As you learn, update prompts to improve reliability, precision, and safety. A strong policy layer helps ensure consistent behavior even when external tools behave unexpectedly.
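A versioned system prompt can be as simple as an immutable record that renders to text. The sketch below assumes a hypothetical `PromptVersion` class and example wording; the structure (role, goals, constraints) follows the paragraph above, while the specific fields are an illustrative choice.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PromptVersion:
    version: str
    role: str
    goals: tuple
    constraints: tuple

    def render(self) -> str:
        """Build the system prompt text from the structured fields."""
        lines = [f"You are {self.role}.", "Goals:"]
        lines += [f"- {goal}" for goal in self.goals]
        lines.append("Constraints:")
        lines += [f"- {constraint}" for constraint in self.constraints]
        return "\n".join(lines)

# Hypothetical example values.
SUPPORT_V1 = PromptVersion(
    version="1.0.0",
    role="a support triage agent",
    goals=("classify incoming tickets",),
    constraints=("never send email without approval",),
)
```

Keeping each version as a frozen value makes it straightforward to store prompts in version control and to replay a sandbox test against an older version when behavior changes.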

Pilot planning and metrics

Plan a controlled pilot with clear success criteria. Define a target process, success metrics (e.g., accuracy, latency, cost), and a rollback plan. Run the pilot in a staging environment, using synthetic data if possible, and monitor behavior continuously. Collect logs, decision rationales, and tool outputs to build a feedback loop that informs prompt and policy refinements. Use A/B testing where feasible to compare agentic approaches against traditional automation. Document lessons learned to accelerate future cycles and justify further investment in AI-powered workflows. This is where you begin to see how AI agents behave in real-world contexts and can adjust based on outcomes.
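The three example metrics can be computed from per-run logs with very little code. This sketch assumes each logged run is a dictionary with the hypothetical keys `correct`, `latency_s`, and `cost_usd`; adapt the field names to whatever your logging actually records.

```python
from statistics import mean

def summarize_pilot(runs):
    """Summarize runs given as dicts with 'correct', 'latency_s', 'cost_usd'."""
    return {
        "accuracy": sum(run["correct"] for run in runs) / len(runs),
        "mean_latency_s": mean(run["latency_s"] for run in runs),
        "cost_per_task_usd": sum(run["cost_usd"] for run in runs) / len(runs),
    }
```

Running the same summary over the agentic variant and the traditional automation baseline gives a like-for-like comparison for the A/B test.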

Scaling, operations, and cost management

As you move beyond a single pilot, you’ll need robust observability, change management, and cost controls. Implement dashboards that show policy coverage, decision quality, and tool utilization. Map your attack surface and put safeguards in place against data leakage, misconfigurations, and runaway costs. Adopt a capability-based plan: add agents for new domains in small, measurable increments, tracking cost per task and return on investment. In Ai Agent Ops’s experience, governance disciplines compound the value of agentic workflows by reducing risk during rapid expansion. Prepare for ongoing maintenance, dependency updates, and continuous policy refinement as your team learns what works best at scale.
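One simple safeguard against runaway costs is a hard budget that refuses further work once it would be exceeded. The `CostTracker` and `BudgetExceeded` names below are hypothetical, and the sketch assumes you can estimate or measure the cost of each task in a single currency.

```python
class BudgetExceeded(RuntimeError):
    """Raised when recording a task would push spend past the budget."""

class CostTracker:
    def __init__(self, budget_usd: float):
        self.budget_usd = budget_usd
        self.spent_usd = 0.0
        self.tasks = 0

    def record(self, cost_usd: float) -> None:
        # Refuse the task rather than silently overspending.
        if self.spent_usd + cost_usd > self.budget_usd:
            raise BudgetExceeded(f"budget of {self.budget_usd} USD would be exceeded")
        self.spent_usd += cost_usd
        self.tasks += 1

    def cost_per_task(self) -> float:
        return self.spent_usd / self.tasks if self.tasks else 0.0
```

The `cost_per_task` figure is the same number the paragraph above suggests tracking as you add agents for new domains.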

Templates, playbooks, and next steps

This section provides ready-to-use templates for prompts, policies, and runbooks to accelerate your first 90 days with AI agents. Start with a starter prompt that defines a role, goals, and constraints, followed by a policy doc that limits tool usage and data access. Build a playbook for incident response and an escalation path to humans when confidence is low. For next steps, plan a 2–4 week sprint to extend the pilot, document outcomes, and socialize learnings with stakeholders. The Ai Agent Ops team recommends you begin with a clearly scoped, low-risk process and then expand, always watching for safety and governance signals. In addition, archive pilot learnings to inform future projects and stakeholder communications.

Tools & Materials

- Laptop or workstation with internet access (for coding, testing, and evaluation)

- Access to an LLM provider, e.g., OpenAI or equivalent (API key and plan adequate for the pilot)

- Test/staging environment (isolate experiments from production)

- Prompts, policies, and templates (starter prompts, guardrails, and playbooks)

- Tool registry and documentation (catalog of connectors and contracts)

- Secret management and security practices (store keys securely; rotate credentials)

Steps

Estimated time: 2-4 hours

1. Define objective and constraints

Start by articulating the end goal, success criteria, and any guardrails. Specify what success looks like and what actions are off-limits. This clarity drives the rest of the design.

Tip: Write down the top 3 measurable outcomes before drafting prompts.

2. Choose architecture and platform

Select an architecture that balances flexibility and safety: an LLM driver, tool registry, and policy layer. Prefer modular components for easier upgrades.

Tip: Prefer a modular setup so you can swap tools without rewriting prompts.

3. Design prompts and policies

Create a concise system prompt plus task prompts, accompanied by robust policies for data access and tool usage. Include failure modes.

Tip: Version-control prompts and simulate edge cases in a sandbox.

4. Connect data sources and tools

Register connectors to your data sources and tools with clear inputs/outputs. Define data boundaries and logging requirements.

Tip: Document data access rules and ensure PII handling is compliant.

5. Build and test pilot workflow

Assemble the end-to-end flow and validate with realistic scenarios in a staging environment. Check for reliability and repeatability.

Tip: Use synthetic data to avoid exposing real customer information.

6. Run pilot and monitor results

Execute the pilot, monitor decision quality, latency, and tool success rates. Collect logs and decision rationales for analysis.

Tip: Set alert thresholds for quality and safety breaches.

7. Analyze results and iterate

Review outcomes, refine prompts and policies, and address any failure modes uncovered during the pilot.

Tip: Iterate in small, controlled cycles to reduce risk.

8. Scale responsibly

Plan staged rollouts, widen governance coverage, and extend observability as you add domains or users.

Tip: Keep a tight feedback loop and document governance decisions.

Questions & Answers

What are AI agents?

AI agents are autonomous software entities that use AI models and tools to perform tasks, make decisions, and take actions with limited human input.

AI agents are smart software that can act on tasks with minimal human help.

How do I start with AI agents quickly?

Begin with a narrowly scoped pilot in a staging environment, using ready-to-run templates and a simple governance plan.

Start small with a pilot in a safe space and build from there.

What is agent orchestration?

Agent orchestration coordinates multiple agents and tools to achieve a goal, handling handoffs, retries, and failures.

Think of it as a conductor guiding several agents and tools.

How do you measure ROI for AI agents?

ROI comes from time saved, improved accuracy, and faster time-to-value; track pilot metrics and cost per action.

Track time saved and cost per task to gauge value.

What are common safety risks?

Risks include data leakage, unintended actions, and bias; mitigate with prompts, policies, and monitoring.

Be mindful of leaks and wrong actions; monitor continuously.

How can I scale AI agents responsibly?

Scale through governance, observability, and incremental rollouts; ensure containment and human oversight when needed.

Scale in steps with checks and clear escalation paths.

Key Takeaways

- Define clear objectives and success metrics

- Design robust prompts and governance from day one

- Pilot thoroughly before scaling to production

- Governance and observability are essential for safe scale